Today we're going to tackle a subjective topic: What is the best site audit/crawling software? Before we jump into the answer, we'll assume your goal is to conduct extremely thorough SEO site audits that leave no stone unturned on your client's site.

We'll also assume that you intend to provide quantitative data to back up all your recommendations (after all, what good are SEO recommendations without hard data behind them?). No two sites are alike, and depending on the goals of the campaign, a site audit/crawler program can provide tremendous insights and actionable items for your client or organization.

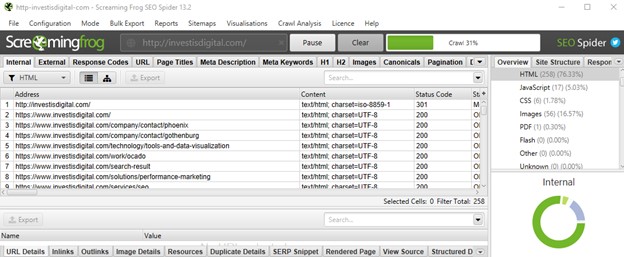

#1 Screaming Frog

This crawler is super handy. In fact, it's our go-to tool for most audits because of its easy data export options and customization settings. What makes it particularly useful is that the tabs in its interface follow a general SEO checklist, including HTTP response codes, page titles, meta data, header tags, image optimization and more. A quick report can be generated and exported in .CSV format, which provides a lot of useful, actionable data for most sites.

- Pros: Fast, intuitive desktop application with advanced spider configuration and export features.

- Cons: Memory maxes out on extremely large sites. However, the spider settings can be adjusted to narrow the crawl and keep it manageable.

- Cost: Free for up to 500 URIs (total files). $160 annually for the unlimited version.
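Because the exported .CSV is where most of the follow-up analysis happens, here is a minimal Python sketch that flags page titles shared by more than one URL. It assumes the export has a header row with the columns `Address` (the URL) and `Title 1` (the page title). Those column names are an assumption, so check them against your own file, and skip any report-name line that sits above the header row before parsing.

```python
import csv
import io
from collections import defaultdict

# Assumed column names; check the header row of your own export.
URL_COLUMN = "Address"
TITLE_COLUMN = "Title 1"


def find_duplicate_titles(csv_file, url_col=URL_COLUMN, title_col=TITLE_COLUMN):
    """Return {title: [urls]} for every non-empty title used by more than one URL."""
    pages_by_title = defaultdict(list)
    for row in csv.DictReader(csv_file):
        title = (row.get(title_col) or "").strip()
        if title:  # pages with a missing title are skipped; report those separately
            pages_by_title[title].append(row[url_col])
    return {t: urls for t, urls in pages_by_title.items() if len(urls) > 1}


if __name__ == "__main__":
    # Tiny in-memory stand-in for an exported crawl file.
    sample = io.StringIO(
        "Address,Title 1\n"
        "https://example.com/,Home\n"
        "https://example.com/a,Widgets\n"
        "https://example.com/b,Widgets\n"
        "https://example.com/c,\n"
    )
    for title, urls in find_duplicate_titles(sample).items():
        print(f"{title!r} is used on {len(urls)} pages: {urls}")
```

With a real export, open the file with `open(path, newline="", encoding="utf-8")` and pass the file object in place of `sample`.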

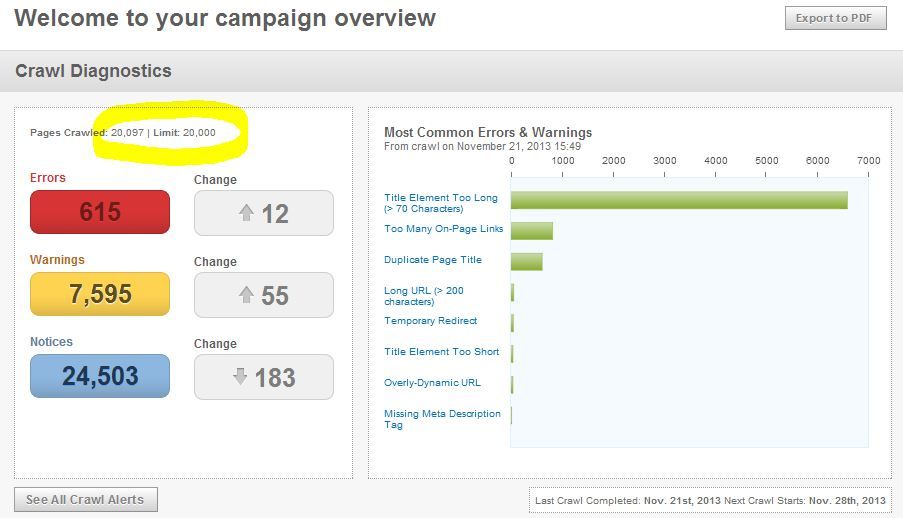

#2 MOZ Campaign

MOZ recently updated its software to MOZ Analytics. However, the classic Pro Campaign version of the crawler is still a favorite because it provides intuitive insights into top issues, including duplicate and near-duplicate titles and content. Its only major limitation is that it can't fully crawl very large sites (over 20,000 pages), but even on large sites it's useful for generating initial recommendations.

- Pros: Gives quick intuitive diagnostics of key issues including duplicate and near-duplicate content.

- Cons: Limited to 20,000 pages. Large sites take several days to crawl.

- Cost: Part of the Pro $99/mo subscription.
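Duplicate and near-duplicate content checks like the ones MOZ reports can be approximated for a handful of pages with a generic technique: split each page's text into overlapping k-word "shingles" and compare pages by Jaccard similarity. This is not MOZ's own method; the function names, the 3-word shingle size and the 0.8 threshold are illustrative assumptions.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or nothing if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity |A & B| / |A | B|; defined as 0.0 for two empty sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, k=3):
    """Yield (url_a, url_b, score) for page pairs with similarity >= threshold.

    pages maps URL -> extracted body text. Every pair is compared, so this
    is only practical for small page sets.
    """
    sets = {url: shingles(text, k) for url, text in pages.items()}
    for (u1, s1), (u2, s2) in combinations(sets.items(), 2):
        score = jaccard(s1, s2)
        if score >= threshold:
            yield u1, u2, score
```

For example, `list(near_duplicates({"/a": text_a, "/b": text_b}))` returns the pairs that cross the threshold. Because every pair is compared, the cost grows quadratically with the number of pages; larger sites would call for an approximation such as MinHash.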

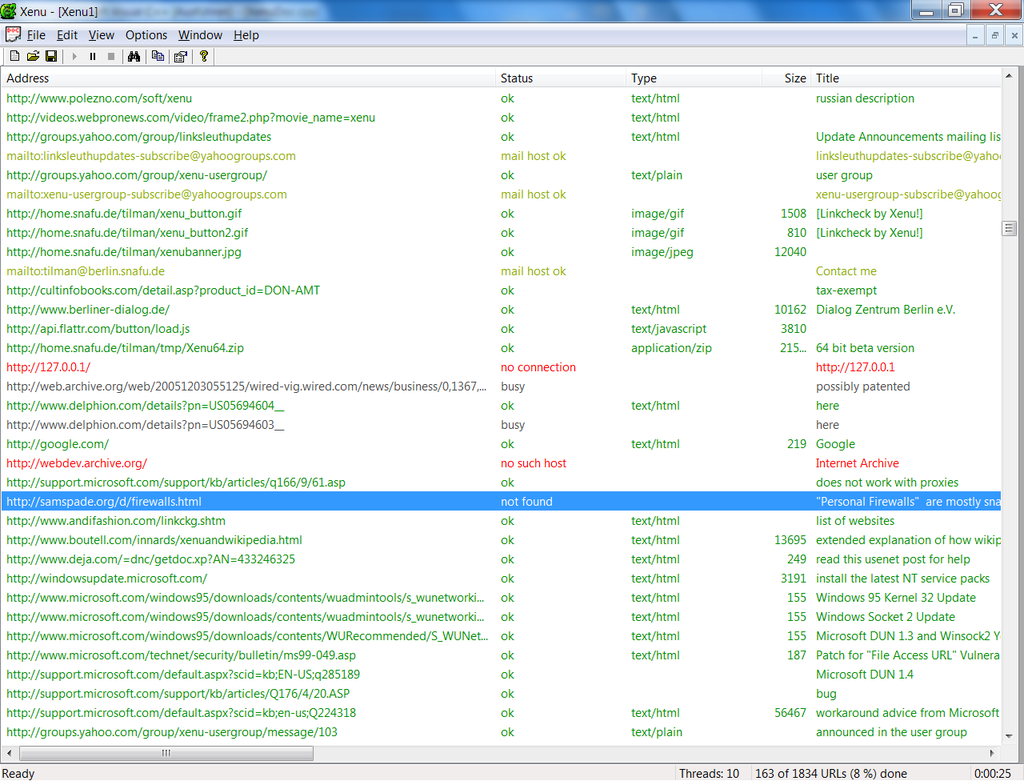

#3 Xenu's Link Sleuth

From time to time, your traditional crawlers can fail for various reasons. Perhaps the main index page URL returns an unexpected 403 response or redirect, or the site you want to crawl is extremely large and has redundant session IDs that Screaming Frog doesn't filter out, even after you adjust the URL and exclusion settings (I speak from experience). Link Sleuth can be a valuable part of the backup plan. Don't ask where the name came from or question its clunky Web 1.0 interface; just keep this crawler on hand as a fallback when other tools come up short.

- Pros: Fast, no-frills platform. Good backup when limitations arise with MOZ and Screaming Frog.

- Cons: Originally designed to locate broken links/404 errors. Clunky interface, design and export features.

- Cost: Free
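When session IDs slip past a crawler's exclusion settings, it helps to test your exclude pattern against real URLs before re-crawling. The Python sketch below shows one approach: a regular expression of the kind an exclude rule might use, plus a helper that strips session parameters so duplicate URLs collapse to one. The parameter names in `SESSION_PARAMS` are hypothetical placeholders; substitute the ones the site actually appends.

```python
import re
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical session parameter names; replace with the ones the site uses.
SESSION_PARAMS = {"sid", "sessionid", "phpsessid", "jsessionid"}

# Exclude-style pattern: matches a URL carrying one of these parameters in its
# query string or as a ";jsessionid=" path parameter.
SESSION_URL_PATTERN = re.compile(
    r"[?&;](?:sid|sessionid|phpsessid|jsessionid)=", re.IGNORECASE
)


def strip_session_ids(url):
    """Return url with session parameters removed so duplicate URLs collapse."""
    parts = urlsplit(url)
    # Drop ";jsessionid=..." path parameters (urlsplit leaves them in the path).
    path = re.sub(r";jsessionid=[^/?#]*", "", parts.path, flags=re.IGNORECASE)
    # Keep every query parameter except the session ones, preserving order.
    query = urlencode(
        [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
         if k.lower() not in SESSION_PARAMS]
    )
    return urlunsplit((parts.scheme, parts.netloc, path, query, parts.fragment))
```

Note that `urlencode` may re-encode some query values (for example, spaces become `+`), so compare normalized URLs with each other rather than with the raw originals.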

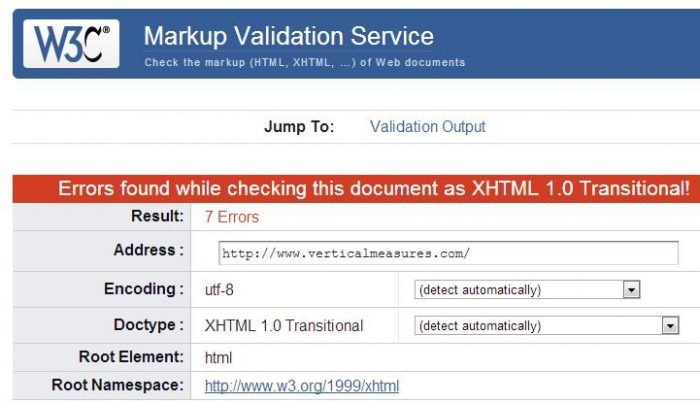

#4 W3C Validator

The W3C Markup Validation Service isn't really a crawler. However, it's a quick and handy tool for identifying specific coding errors. Traditional site audits should generally focus on fundamental site issues such as crawl errors, thin or duplicate content and poorly written title tags. Still, markup and CSS errors can also inhibit site performance and shouldn't be overlooked (W3C offers a separate CSS Validation Service for stylesheets).

- Pros: Quickly flags code-level errors and offers recommendations.

- Cons: Doesn't diagnose general crawl issues.

- Cost: Free

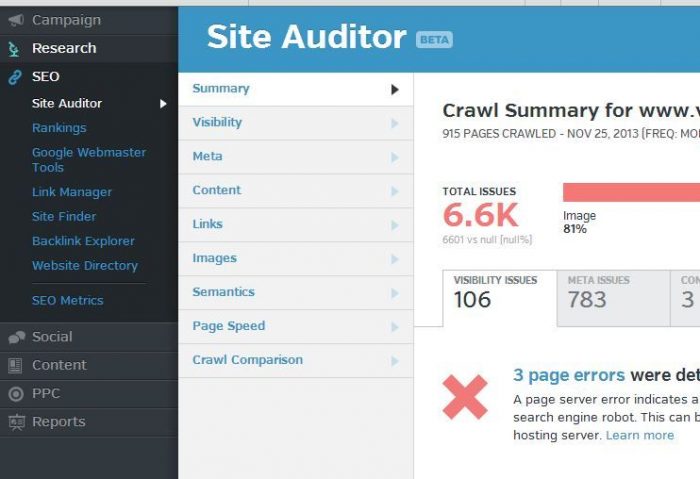

#5 Raven

The Raven Site Auditor tool is still in beta, but it has some generally useful features, such as tables that can be sorted by duplicate titles. There's also a handy color-coded indicator bar that highlights trouble spots on a site. Despite these features, however, it still lacks the easy exporting functionality of other tools.

- Pros: In-depth link data (as expected from Raven).

- Cons: Limited to 10,000 pages crawled.

- Cost: Part of Raven Pro $99/mo account.

In summary, there are several great site audit programs available, and the best SEO crawling software depends on your specific campaign goals and your client's needs. Screaming Frog and MOZ Pro Campaigns are particularly helpful because of their thorough site scanning capabilities and data export features.

However, it's wise to have additional software ready as a backup plan when your initial crawl doesn't go as planned. Ultimately, the best site auditing/crawling software is the one that yields the most comprehensive data, supports your key recommendations and leads to a solid, actionable plan for site improvements.